Dear diary (blog) – I’ve neglected you. It’s been over 2.5 years. I see you haven’t changed – still patiently waiting (along with my unused dental floss, stack of possible junk mail, to-read articles, holiday cards to respond to…). Habits are hard to maintain. Well, I’m back today, and I might be seeing you more frequently.

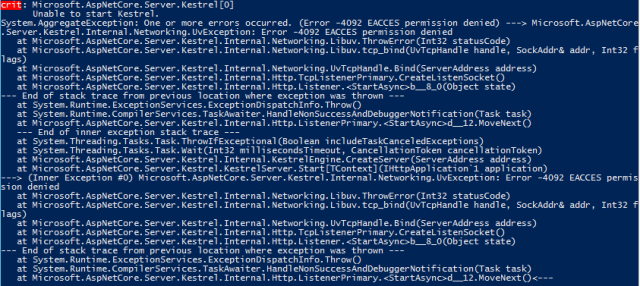

Note to reader (and mostly myself, since this entry is to keep from having to solve the same problems over again in 3 months): screenshots and example code will be added.

My software development stack changed significantly this summer. Angular and asp.net have each spent 2+ years in limbo, waiting for their successors. Both successors were released in final form this summer: Angular 2 and asp.net core. Along the way, visual studio code has established itself as a very fine code editor (in the WebStorm genre), especially for client side coding.

I have been working on a responsive version of my website, fpnotebook.com. More than a year ago, I created a first version based on the angular 1 framework.

In the last month, I worked on converting the first version to one connected to a backend, to manage identity/authentication/authorization and add backend functionality such as user notes stored in a database. I started with an asp.net core mvc app for note management, which I will discuss in another post. My intent was to get this working with the database and then add an API that the angular app can call. Identity is the tricky part, especially communicating with the client via tokens, and once Identity Server 4 is finalized, I will implement this part.

Conversion from angular 1 to angular 2 is not trivial (at least for me). I spent the better part of a week converting version 1 to version 2 and completed a working draft: angular 2 version of FPN. It is not complete. The workshop allows selection of content, but you cannot yet generate quizzes or other output. Annotations do not work.

My thoughts:

Angular CLI

At first, angular 2 seems so much more complicated than angular 1. With angular 1, I included a couple of scripts on my index page and it all worked. I knew where all my files were; started with js and ended with js.

Angular 2 is not like that. You recognize the Angular 2 “quickstart” as a misnomer once you have set up the app and tooling, configured the vs code editor for launch/debug, set up typescript compilation, added testing…

However, angular-cli, despite the warnings about still being in beta, worked very well (A+). Magically (via node, webpack) it sets up an environment that takes care of all of the drudgery. It really is a quickstart that includes a basic setup with browser serving, testing (karma, jasmine), typescript compiling, minification, optimization.

Need to add a component with angular cli? At the command prompt, “ng g component named-thing” adds a NamedThingComponent in its own directory, along with a spec test file, html template and css file (or less or scss). The same is available for services, directives, pipes… Angular CLI sets up most of the wiring (add to module file, include in component annotation).
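To make the naming convention concrete, here is a minimal sketch in plain typescript. The two helper functions are hypothetical (they are not part of angular-cli), and the generated file names are my assumption about the layout described above, so treat this as an illustration of the pattern rather than what the tool does internally.

```typescript
// Illustrative helpers only: they mimic the naming convention described above
// and are NOT part of angular-cli. The file names are an assumption.

// Converts a dashed name to the component class name,
// e.g. "named-thing" becomes "NamedThingComponent".
function toComponentClassName(dashedName: string): string {
  const pascal = dashedName
    .split("-")
    .filter(part => part.length > 0)
    .map(part => part.charAt(0).toUpperCase() + part.slice(1))
    .join("");
  return pascal + "Component";
}

// Lists the files expected in the component's own directory:
// class, spec test, html template and style sheet.
function expectedComponentFiles(dashedName: string, styleExt: string = "css"): string[] {
  const base = dashedName + "/" + dashedName + ".component";
  return [base + ".ts", base + ".spec.ts", base + ".html", base + "." + styleExt];
}

const className = toComponentClassName("named-thing");
const files = expectedComponentFiles("named-thing", "less");
```

Here `className` holds the class name from the example above, and `files` lists four paths under `named-thing/`, ending with the `.less` style sheet.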

Most of this works great, but there are caveats. Testing is tricky, as mocking dependencies (modules, components, services) is not straightforward. However, the main app component serves as an example for setting this up, and there are quite a few examples on the angular website.

For some time, I was viewing the test output via the console window, frustrated that I could not see it in the typical nice jasmine output I prefer. At the same time, I was wondering what the Karma debug button did. Add the jasmine report viewer npm package and voilà, the jasmine test view is back.

When I set up my workspace, I open the root working directory in windows explorer. I then open the folder in vs code and in two separate powershell windows. In one powershell window, I type ng serve (sets up the app running at localhost:4200) and in the other, ng test (sets up a browser running karma/jasmine). I use the integrated terminal window in VS Code (Ctrl-`), which I have set to use powershell, to navigate directories and run commands such as those to generate components, directives, services…

The angular-cli setup is a magical black box, and customization is not intuitive. Scripts and css are added to the angular config file, not to the index.html. Although I can get visual studio code to launch the site for debugging, I cannot get typescript debugging to work in VS Code. I had to debug the javascript in Chrome, which was workable.

CSS Frameworks

As with nearly all of my single page apps, this responsive version uses bootstrap 3. Bootstrap 4 has been in alpha for some time. Even if I wanted to try the angular material framework, it is still in alpha. From what I’ve seen of material so far, I prefer the bootstrap look. Having styled responsive websites from scratch with CSS, it is a relief to have professional looking content produced in early development stages without extra effort (albeit with similar appearance to every other bootstrap page). I often use Bootswatch to at least change the Bootstrap color themes. I use the LESS preprocessor and import the bootstrap/bootswatch “variables.less” file into my own LESS style sheet.

The other decision with Bootstrap is whether to use the official bootstrap javascript file for dynamic effects (e.g. carousel, accordion/collapse), or to use ng-bootstrap/ui-bootstrap. I used angular-ui bootstrap for the last version, but its angular 2 counterpart (ng-bootstrap) is still in development. I’ve found that most of the standard functionality of the official bootstrap.js integrates well with angular (except for more complicated solutions). In any event, for this solution, I stayed with the standard bootstrap.js.

Finally, there is the integration of css and angular 2. Angular cli makes this very easy. When components are created, a css (or less or SCSS) file is also created that is linked to the component and scoped to that particular component (by default, angular does this with generated attributes on the component’s elements). In other words, the css/less/scss for slideshow would be specific to that component. At first I had assumed that I could put CSS in a parent component and that it would flow through to the child components, but that did not work. For css affecting the entire application, I used the main css file. However, I had to keep the main css as a plain css file, as I could not get the LESS or SCSS compilers to process the main styles file. There is probably a way to do this via angular cli setup (without resorting to other tooling, e.g. gulp).

Angular Code, Typescript, Observables and assorted language features

I seem to like languages written by Anders Hejlsberg. I started with Turbo Pascal in the 1980s, moved to C# in the mid-2000s, and now I really like Typescript. I like the structure (modules, classes, properties, public/private, interfaces), typing, conveniences (generics, string templates). Yet, there are times when I need to actually use a dynamic object and can just fall back to dynamic typing and plain old javascript. Finally, using Typescript is using ES6 and ES7 NOW, compiled to compatible code for today’s browsers.
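As a small illustration of those features side by side, here is a sketch with an interface, a generic class with public/private members, a string template, and the dynamic escape hatch via `any`. The `Note` and `Repository` names are made up for this example and are not taken from the fpnotebook code.

```typescript
// All names below are hypothetical, for illustration only.

// An interface describes the shape of a note.
interface Note {
  id: number;
  title: string;
}

// A generic class that stores anything with a numeric id.
class Repository<T extends { id: number }> {
  private items: T[] = [];

  public add(item: T): void {
    this.items.push(item);
  }

  public findById(id: number): T | undefined {
    // filter(...)[0] is undefined when nothing matches.
    return this.items.filter(item => item.id === id)[0];
  }
}

const notes = new Repository<Note>();
notes.add({ id: 1, title: "Angular 2 conversion" });

// String template: typed properties are checked at compile time.
const found = notes.findById(1);
const label = found ? `Note #${found.id}: ${found.title}` : "not found";

// Escape hatch: `any` switches off type checking, as in plain javascript.
const loose: any = JSON.parse('{"anything": {"goes": 42}}');
const answer = loose.anything.goes;
```

The compiler would reject `notes.add({ id: 2 })` because `title` is missing, while anything done through `loose` is left unchecked, which is the trade-off of falling back to dynamic objects.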

Pairing typescript with angular 2 works very well. Using typescript with angular 1 was awkward. Typescript has modules and classes, and I found using this with angular modules and controllers to be, at times, a confusing mess. Now with angular 2 written in typescript, the code is much cleaner. Plenty of angular 1 code was no longer needed when I moved to angular 2.

Asynchronous processes are tricky. Promises were a bit confusing, but I did somewhat grasp them in the last couple of years. I at least could copy/paste examples from my own code and that of others, modify them and they worked. Observables are another story. When I follow online observable examples, I can grasp that exact example, and it works. But I am challenged when customizing the examples for my own use. Ben Lesh has some very nice intro videos online (check out those from AngularConnect), and he recommends getting to know a few high yield operators (map, filter, scan, mergeMap, switchMap, combineLatest, concat, do). I am learning, and hopefully the next new approach to async will not come so soon that I can’t master this iteration.
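To get a feel for what three of those operators do, here is a toy observable written from scratch. This is emphatically not RxJS: `TinyObservable` is a hypothetical, synchronous stand-in with no errors, completion or unsubscribe, and it only shows how filter, map and scan each wrap the previous step in the chain.

```typescript
// Toy stand-in for an observable; NOT the RxJS implementation.
type Observer<T> = (value: T) => void;

class TinyObservable<T> {
  private producer: (observer: Observer<T>) => void;

  constructor(producer: (observer: Observer<T>) => void) {
    this.producer = producer;
  }

  // Emits the given values, in order, to each subscriber.
  static of<U>(...values: U[]): TinyObservable<U> {
    return new TinyObservable<U>(observer => values.forEach(v => observer(v)));
  }

  // Nothing runs until someone subscribes.
  subscribe(observer: Observer<T>): void {
    this.producer(observer);
  }

  // map: transform each value.
  map<R>(project: (value: T) => R): TinyObservable<R> {
    return new TinyObservable<R>(observer =>
      this.subscribe(value => observer(project(value))));
  }

  // filter: pass along only the values that match.
  filter(predicate: (value: T) => boolean): TinyObservable<T> {
    return new TinyObservable<T>(observer =>
      this.subscribe(value => {
        if (predicate(value)) {
          observer(value);
        }
      }));
  }

  // scan: keep a running accumulation and emit it after every value.
  scan<A>(accumulate: (acc: A, value: T) => A, seed: A): TinyObservable<A> {
    return new TinyObservable<A>(observer => {
      let acc = seed;
      this.subscribe(value => {
        acc = accumulate(acc, value);
        observer(acc);
      });
    });
  }
}

const runningTotals: number[] = [];
TinyObservable.of(1, 2, 3, 4, 5)
  .filter(n => n % 2 === 1)       // keeps 1, 3, 5
  .map(n => n * 10)               // 10, 30, 50
  .scan((sum, n) => sum + n, 0)   // 10, 40, 90
  .subscribe(total => { runningTotals.push(total); });
```

After the subscribe call, `runningTotals` contains 10, 40 and 90. The real operators behave similarly for this simple case, but also handle asynchronous sources, errors and teardown, which is where most of the difficulty lives.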

Some mappings from angular 1 to 2 are simple and straightforward. Controller to Component, for example, is not a giant leap (albeit with new annotations, import statements, module definitions). Routing is much improved and I am using the angular version instead of the angular ui version (which I used for my angular 1 projects). It sounds as if the angular team focused on routing efficiency, lazy loading… and I think this shows in the final release. I like the way angular uses native html functionality (e.g. [src] maps to the native src property, (click) maps to the native click event).

One of my initial roadblocks in moving from angular 1 to 2 was how to implement the routing. Using angular 1 with angular UI router, I had routes that used a template with slots for 2 or 3 named outlets; each route defined what would fill each outlet. To solve this, I ended up routing to component views, each of which is a basic template composed of slots filled with other components (instead of router-outlets). For example, the page-view-component has a spot for the chapter-component and page-component.

There are areas which were more difficult to remap. Hrefs are replaced with routerLink, and routes are no longer prefixed with ‘#’. In angular 2, an href will reload the browser/entire framework and navigate to the target (browser refresh and all, lost data…); contrast with routerLink, which uses the current angular routing. Although not difficult conceptually, this remapping was time consuming. One frequent snag was that the bracketed form, [routerLink], takes an expression (code), not a plain string.
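On that snag: as I understand it, `routerLink="/page/1"` (no brackets) takes a literal string, while `[routerLink]="['/page', id]"` evaluates an expression, typically an array of path segments. The sketch below shows how an old hash-prefixed href could be mapped to that array form. The helper name and the `#/page/...` paths are hypothetical, and this is only one way to approach the remapping, not necessarily how I did it in the project.

```typescript
// Hypothetical helper: turns an angular 1 style hash href into the array
// form that [routerLink] accepts as an expression.
function hrefToRouterCommands(href: string): string[] {
  // Drop the leading '#' (or '#!') that angular 1 routes were prefixed with.
  const path = href.replace(/^#!?/, "");
  // Split into non-empty path segments.
  const [first, ...rest] = path.split("/").filter(segment => segment.length > 0);
  if (first === undefined) {
    return ["/"]; // an empty path maps to the root route
  }
  // The first command carries the leading slash; the rest are plain segments.
  return ["/" + first, ...rest];
}

const commands = hrefToRouterCommands("#/page/cardiology");
```

Here `commands` is the two-element array `["/page", "cardiology"]`, which a template would bind as `[routerLink]="commands"`.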

ngBindHtml became [innerHtml], which at first glance appears the same but has one significant difference: only basic html works, not angular directives. We want to avoid href links because they reload the browser, but if we convert these to routerLink, they will not work within [innerHtml]. My fpnotebook page content for this version sits in precompiled json with hyperlinks. This worked very well with ngBindHtml in angular 1, and without it… the project would be hampered. Fortunately, a dynamic component template solved this problem.

Angular 2 has changed significantly during its alpha and beta cycles (which is what alpha/beta are for). However, online searches for code examples yield many answers that no longer work. Although this is less of an issue than with asp and asp.net (which have decades of deprecated examples on the web), it does make adapting solutions for the final angular 2 release more difficult.

Several VS Code plug-ins are helpful: Path intellisense (for filename typing in import statements) and Angular 2 typescript snippets.

Overall, the angular 2 experience is a very positive one, especially when using angular cli. The draft in progress is here: angular 2 version of FPN.

The next steps are to integrate with an asp.net core backend and identity server 4. I hope to add code examples and screenshots to this post in the near future.

Until next time (which hopefully, dear diary/blog, will be sooner than 2.5 years).
